AI Model Achieves Clinical Expert Level Accuracy in Analyzing Complex MRIs and 3D Medical Scans

Posted on 07 Oct 2024

Artificial neural networks train by performing repeated calculations on large datasets that have been carefully examined and labeled by clinical experts. While standard 2D images display length and width, 3D imaging technologies introduce depth, creating "volumetric" images that require more time, skill, and attention for expert interpretation. For instance, a 3D retinal imaging scan may consist of nearly 100 2D images, and a highly trained specialist needs several minutes of close examination to identify subtle disease biomarkers and quantify them, for example by measuring the volume of an anatomical swelling. Now, researchers have developed a deep-learning framework that rapidly trains itself to automatically analyze and diagnose MRIs and other 3D medical images, achieving accuracy comparable to that of medical experts in a fraction of the time.

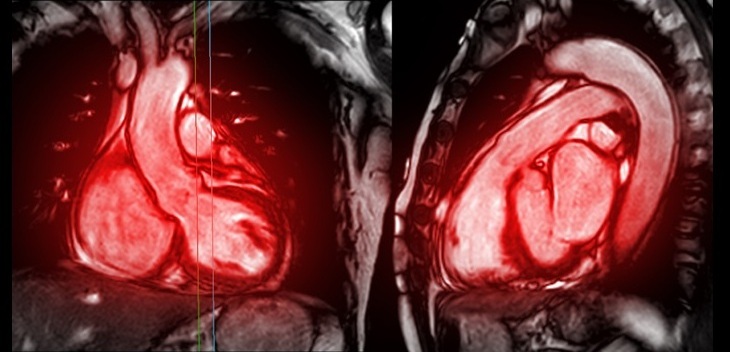

Unlike other models being developed for 3D image analysis, the new framework created by researchers at UCLA (Los Angeles, CA, USA) is highly adaptable across various imaging modalities. It has been tested on 3D retinal scans (optical coherence tomography) for disease risk biomarkers, ultrasound videos for heart function assessment, 3D MRI scans for evaluating liver disease severity, and 3D CT scans for chest nodule malignancy screening. In a paper published in Nature Biomedical Engineering, the researchers highlight the broad capabilities of the system, suggesting that it could be valuable in many other clinical settings. Additional studies are planned to further explore its applications.

The UCLA model, named SLIViT (SLice Integration by Vision Transformer), features a unique combination of two artificial intelligence components and a specialized learning approach. According to the researchers, this combination enables it to accurately predict disease risk factors from medical scans across multiple volumetric modalities, even with moderately sized labeled datasets. SLIViT’s automated annotation could benefit both patients and clinicians by enhancing diagnostic efficiency and timeliness, while also advancing medical research by reducing data acquisition costs and shortening the time required for data collection. Additionally, it establishes a foundational model that can expedite the development of future predictive models.

“SLIViT overcomes the training dataset size bottleneck by leveraging prior ‘medical knowledge’ from the more accessible 2D domain,” said Berkin Durmus, a UCLA PhD student and co-first author of the article. “We show that SLIViT, despite being a generic model, consistently achieves significantly better performance compared to domain-specific state-of-the-art models. It has clinical applicability potential, matching the accuracy of manual expertise of clinical specialists while reducing time by a factor of 5,000. And unlike other methods, SLIViT is flexible and robust enough to work with clinical datasets that are not always in perfect order.”
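
For readers who want a concrete picture of what "slice integration" could look like, the following is a minimal sketch and not the researchers' implementation. It treats a volume as a stack of 2D slices, turns each slice into a feature vector, and lets a single self-attention layer combine the slices into one prediction. Every name here (`extract_slice_features`, `self_attention`, `init_params`, `predict`) is hypothetical. The intensity histogram is only a stand-in for a 2D network pretrained on 2D medical images, and the weights are random and untrained, so the output score carries no clinical meaning.

```python
import numpy as np


def extract_slice_features(volume, n_bins=8):
    """Hypothetical stand-in for a pretrained 2D feature extractor.

    Returns one feature vector per slice. A real system would run a 2D
    network here; an intensity histogram just keeps the sketch small.
    """
    feats = []
    for slice_2d in volume:  # iterate over the depth axis
        hist, _ = np.histogram(slice_2d, bins=n_bins, range=(0.0, 1.0))
        feats.append(hist / slice_2d.size)  # counts -> fractions
    return np.stack(feats)  # shape: (n_slices, n_bins)


def self_attention(tokens, w_q, w_k, w_v):
    """Single-head scaled dot-product self-attention across slice tokens."""
    q, k, v = tokens @ w_q, tokens @ w_k, tokens @ w_v
    scores = q @ k.T / np.sqrt(k.shape[-1])
    scores = scores - scores.max(axis=-1, keepdims=True)  # numerical stability
    weights = np.exp(scores)
    weights = weights / weights.sum(axis=-1, keepdims=True)
    return weights @ v  # each slice token now mixes in the other slices


def init_params(n_bins=8, max_slices=128, seed=0):
    """Random, untrained parameters (assumption: for illustration only)."""
    rng = np.random.default_rng(seed)
    return {
        "pos": rng.normal(scale=0.02, size=(max_slices, n_bins)),
        "w_q": rng.normal(scale=0.1, size=(n_bins, n_bins)),
        "w_k": rng.normal(scale=0.1, size=(n_bins, n_bins)),
        "w_v": rng.normal(scale=0.1, size=(n_bins, n_bins)),
        "w_out": rng.normal(scale=0.1, size=n_bins),
    }


def predict(volume, params):
    """Slice features -> attention across slices -> one volume-level score."""
    tokens = extract_slice_features(volume)
    tokens = tokens + params["pos"][: len(tokens)]  # encode slice order
    mixed = self_attention(tokens, params["w_q"], params["w_k"], params["w_v"])
    pooled = mixed.mean(axis=0)  # average over slices
    return float(pooled @ params["w_out"])


# Synthetic volume: 100 slices of 64x64 pixels with intensities in [0, 1).
rng = np.random.default_rng(1)
volume = rng.random((100, 64, 64))
score = predict(volume, init_params())
print(f"volume-level score (untrained, illustrative): {score:.4f}")
```

The design point this illustrates is the one Durmus describes: the per-slice step can reuse knowledge learned from plentiful 2D images, so only the comparatively small integration step has to learn from scarce labeled 3D volumes.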