Tongue Ultrasound System Improves Speech Therapy

By MedImaging International staff writers

Posted on 26 Oct 2017

A new study describes how an innovative visual biofeedback system based on an ultrasound probe placed under the jaw can help treat speech impediments.

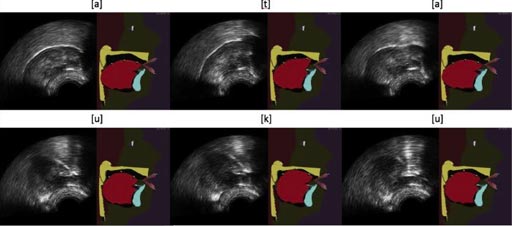

Developed by researchers at GIPSA-Lab (Saint-Martin-d'Hères, France) and INRIA Grenoble Rhône-Alpes (INRIA; Montbonnot-Saint-Martin, France), the system uses ultrasound images to display tongue movements in real time via an animated articulatory talking head, which serves as a virtual clone of the speaker. Besides the face and lips, the avatar shows the tongue, palate, and teeth, which are usually hidden inside the vocal tract. Another version of the system, still under development, animates the articulatory talking head automatically from the user’s voice rather than from ultrasound images.

Image: Articulatory talking head animations from ultrasound images for “ata” (top) and “uku” (bottom) (Photo courtesy of Thomas Hueber / GIPSA-Lab).

The strength of the new system lies in an integrated cascaded Gaussian mixture regression algorithm that can process the articulatory movements required for targeted therapeutic applications. The algorithm exploits a probabilistic model based on a large articulatory database acquired from an “expert” speaker capable of pronouncing all of the sounds in one or more languages. The model is automatically adapted to the morphology of each new user during a short calibration phase, in which the patient pronounces a few phrases. The study was published in the October 2017 issue of Speech Communication.
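For readers curious about the underlying idea, the following is a minimal sketch of plain Gaussian mixture regression, not the cascaded, speaker-adapted variant used in the study and not the researchers' code. It assumes a single input feature x (standing in for something measured from an ultrasound image) and a single output y (standing in for one articulatory parameter). The function name `gmr_predict`, the tuple layout, and all numeric values are hypothetical and chosen only for illustration.

```python
import math


def gmr_predict(x, components):
    """Return E[y | x] for a Gaussian mixture over the pair (x, y).

    Each component is a tuple (weight, mean_x, mean_y, var_x, cov_xy).
    This layout is an assumption made for this sketch.
    """
    log_scores = []
    cond_means = []
    for weight, mean_x, mean_y, var_x, cov_xy in components:
        diff = x - mean_x
        # Log of weight * N(x; mean_x, var_x): how well the component explains x.
        log_scores.append(
            math.log(weight)
            - 0.5 * (diff * diff / var_x + math.log(2 * math.pi * var_x))
        )
        # Linear regression of y on x within this component.
        cond_means.append(mean_y + cov_xy / var_x * diff)

    # Normalize the scores into responsibilities (subtract the max for stability).
    top = max(log_scores)
    scores = [math.exp(s - top) for s in log_scores]
    total = sum(scores)

    # The prediction is the responsibility-weighted average of the component means.
    return sum(s / total * m for s, m in zip(scores, cond_means))


# Illustrative mixture with two components (made-up numbers).
example_model = [
    (0.5, -1.0, -1.0, 1.0, 0.0),
    (0.5, 1.0, 1.0, 1.0, 0.0),
]
prediction = gmr_predict(0.0, example_model)
```

In a real system the inputs and outputs would be vectors and the mixture parameters would be learned from recorded data; the scalar form is used here only to keep the arithmetic visible.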

“Visual biofeedback is the process of gaining awareness of physiological functions through the display of visual information,” concluded lead author Diandra Fabre, MSc, of GIPSA-Lab, and colleagues. “As speech is concerned, visual biofeedback usually consists in showing a speaker his/her own articulatory movements, which has proven useful in applications such as speech therapy or second language learning.”

Speech therapists specialize in the evaluation, diagnosis, and treatment of communication disorders, cognitive-communication disorders, voice disorders, and swallowing disorders, and play an important role in the diagnosis and treatment of autism spectrum disorder.

Related Links:

GIPSA-Lab

INRIA Grenoble Rhône-Alpes