AI Tool Performs as Well as Experienced Radiologists in Interpreting Chest X-rays

By MedImaging International staff writers

Posted on 23 Dec 2019

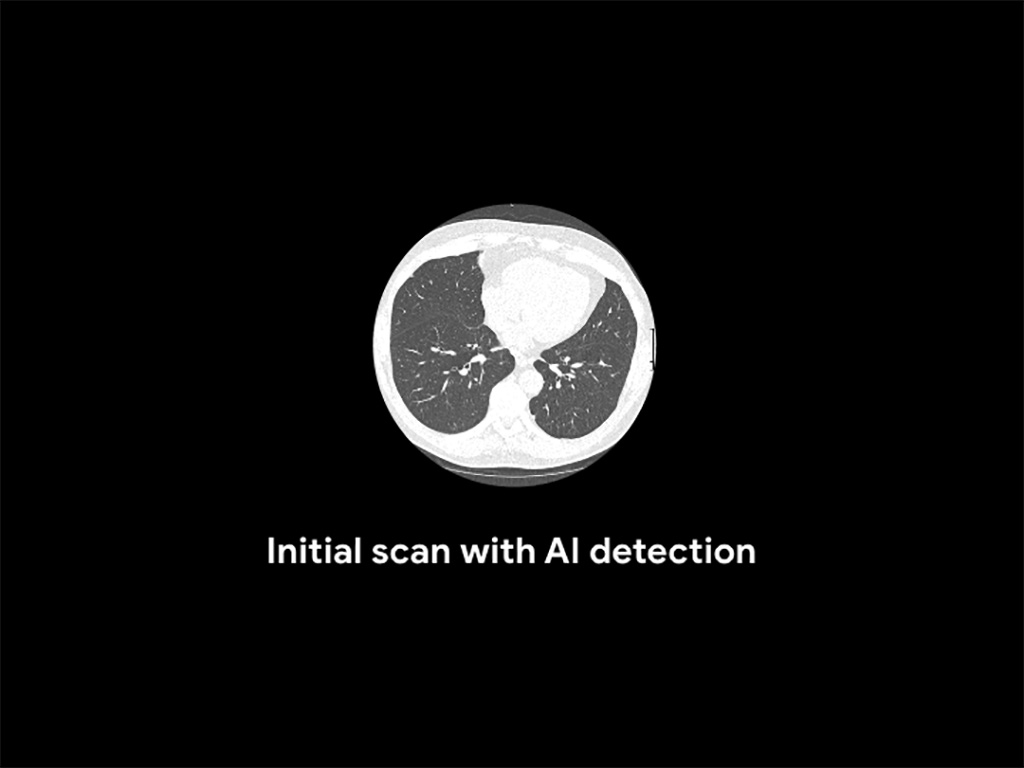

A team of researchers from Google Health (Palo Alto, CA, USA) has developed a sophisticated type of artificial intelligence (AI) that can detect clinically meaningful chest X-ray findings as effectively as experienced radiologists. Their findings, based on a type of AI called deep learning, could provide a valuable resource for the future development of AI chest radiography models.

Chest radiography, or X-ray, one of the most common imaging exams worldwide, is performed to help diagnose the source of symptoms like cough, fever and pain. Despite its popularity, the exam has limitations. Deep learning, a sophisticated type of AI in which the computer can be trained to recognize subtle patterns, has the potential to improve chest X-ray interpretation, but it too has limitations. For instance, results derived from one group of patients cannot always be generalized to the population at large.


Researchers at Google Health developed deep learning models for chest X-ray interpretation that overcome some of these limitations. They used two large datasets to develop, train and test the models. The first dataset consisted of more than 750,000 images from five hospitals in India, while the second set included 112,120 images made publicly available by the National Institutes of Health (NIH). A panel of radiologists convened to create the reference standards for certain abnormalities visible on chest X-rays used to train the models.

Tests of the deep learning models showed that they performed on par with radiologists in detecting four findings on frontal chest X-rays: fractures, nodules or masses, opacity (an abnormal appearance on X-rays often indicative of disease) and pneumothorax (the presence of air or gas in the cavity between the lungs and the chest wall). Radiologist adjudication led to increased expert consensus on the labels used for model tuning and performance evaluation. The overall consensus increased from just over 41% after the initial read to almost 97% after adjudication.

"Chest X-ray interpretation is often a qualitative assessment, which is problematic from a deep learning standpoint," said Daniel Tse, M.D., product manager at Google Health. "By using a large, diverse set of chest X-ray data and panel-based adjudication, we were able to produce more reliable evaluation for the models."

Related Links:

Google Health
