Deep Learning-Based System Detects and Classifies Mammogram Masses

By MedImaging International staff writers

Posted on 16 Aug 2018

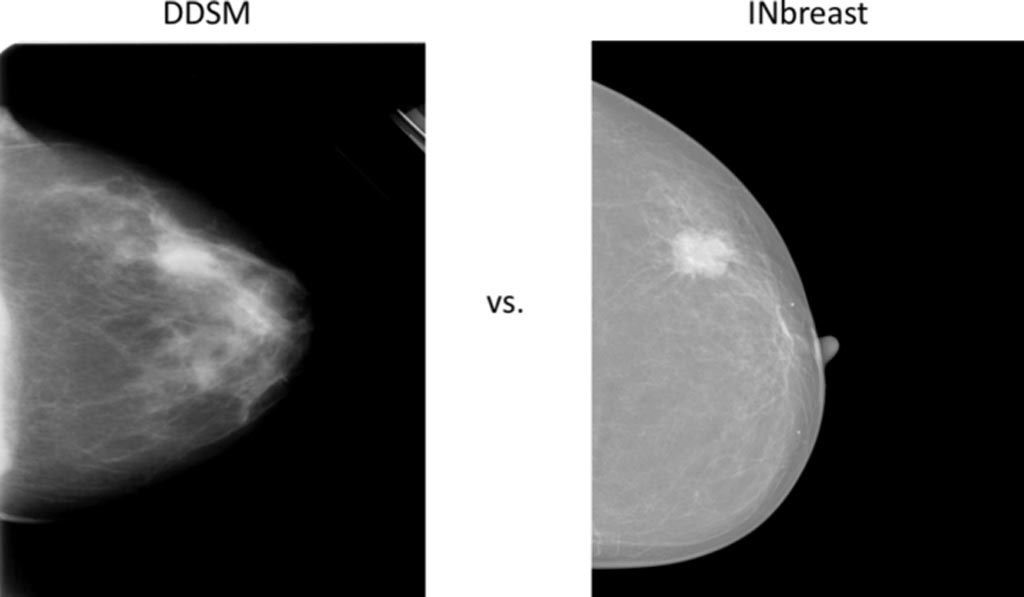

Researchers from Kyung Hee University (Yongin, South Korea) have developed a fully integrated computer-aided diagnosis (CAD) system that uses deep learning and a deep convolutional neural network (CNN) to detect, segment, and classify masses in mammograms. In a new study published in the International Journal of Medical Informatics, the researchers describe how they used a regional deep learning model, You-Only-Look-Once (YOLO), to detect breast masses across entire mammograms. They then used a new deep network model based on a full-resolution convolutional network (FrCN) to segment the mass lesions pixel by pixel. Finally, a deep CNN was used to recognize each mass and classify it as either benign or malignant.
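The three stages chain together: each detected region is cropped, segmented, and then classified. The sketch below shows only that data flow. It is a minimal illustration and not the researchers' code. `run_cad`, `Finding`, the `(row0, col0, row1, col1)` box convention, and the `toy_*` stand-ins are all hypothetical. The toy functions replace YOLO, FrCN, and the CNN with trivial rules so the example runs on its own.

```python
from dataclasses import dataclass
from typing import Callable, List, Tuple

Image = List[List[float]]
Mask = List[List[int]]
Box = Tuple[int, int, int, int]  # hypothetical convention: (row0, col0, row1, col1), end-exclusive


@dataclass
class Finding:
    box: Box    # region proposed by the detector
    mask: Mask  # pixel-wise lesion mask inside the box
    label: str  # "benign" or "malignant"


def crop(image: Image, box: Box) -> Image:
    """Cut the detected region of interest out of the full image."""
    r0, c0, r1, c1 = box
    return [row[c0:c1] for row in image[r0:r1]]


def run_cad(image: Image,
            detect: Callable[[Image], List[Box]],
            segment: Callable[[Image], Mask],
            classify: Callable[[Image, Mask], str]) -> List[Finding]:
    """Hypothetical cascade: detect -> crop -> segment -> classify."""
    findings = []
    for box in detect(image):
        roi = crop(image, box)
        mask = segment(roi)
        findings.append(Finding(box, mask, classify(roi, mask)))
    return findings


# Placeholder stages (NOT the models from the study), used only to exercise the flow.
def toy_detect(image: Image) -> List[Box]:
    return [(1, 1, 3, 3)]


def toy_segment(roi: Image) -> Mask:
    return [[1 if v > 0.5 else 0 for v in row] for row in roi]


def toy_classify(roi: Image, mask: Mask) -> str:
    area = sum(map(sum, mask))
    return "malignant" if area > 2 else "benign"


img = [[0.0, 0.0, 0.0, 0.0],
       [0.0, 0.9, 0.8, 0.0],
       [0.0, 0.7, 0.2, 0.0],
       [0.0, 0.0, 0.0, 0.0]]

if __name__ == "__main__":
    for f in run_cad(img, toy_detect, toy_segment, toy_classify):
        print(f.box, f.mask, f.label)  # (1, 1, 3, 3) [[1, 1], [1, 0]] malignant
```

Passing the stages in as functions keeps the cascade independent of any particular model, so each stage could be swapped or evaluated on its own.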

The researchers used the publicly available, annotated INbreast database to evaluate the accuracy of the integrated CAD system in detection, segmentation, and classification. According to the researchers, four-fold cross-validation tests on the INbreast dataset showed that the system detected masses with an accuracy of 98.96%, a Matthews correlation coefficient (MCC) of 97.62%, and an F1-score of 99.24%. Mass segmentation via FrCN achieved an overall accuracy of 92.97%, an MCC of 85.93%, a Dice coefficient (F1-score) of 92.69%, and a Jaccard similarity coefficient of 86.37%. Classification of the detected and segmented masses via the CNN achieved an overall accuracy of 95.64%, an area under the curve (AUC) of 94.78%, an MCC of 89.91%, and an F1-score of 96.84%.
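The metrics quoted above all derive from counts of true positives (TP), true negatives (TN), false positives (FP), and false negatives (FN). For segmentation, those counts are taken over pixels. The sketch below uses the standard textbook definitions. It assumes binary labels (1 = mass) and does not reproduce how the study averaged results across images or folds. Returning 0.0 when a metric is undefined is an arbitrary convention chosen for this example.

```python
import math
from typing import List, Sequence, Tuple


def confusion(y_true: Sequence[int], y_pred: Sequence[int]) -> Tuple[int, int, int, int]:
    """Count (TP, TN, FP, FN) for binary labels, where 1 = mass."""
    tp = sum(1 for t, p in zip(y_true, y_pred) if t == 1 and p == 1)
    tn = sum(1 for t, p in zip(y_true, y_pred) if t == 0 and p == 0)
    fp = sum(1 for t, p in zip(y_true, y_pred) if t == 0 and p == 1)
    fn = sum(1 for t, p in zip(y_true, y_pred) if t == 1 and p == 0)
    return tp, tn, fp, fn


def accuracy(tp: int, tn: int, fp: int, fn: int) -> float:
    return (tp + tn) / (tp + tn + fp + fn)


def f1_dice(tp: int, tn: int, fp: int, fn: int) -> float:
    """F1-score; on pixel counts this is the Dice coefficient."""
    denom = 2 * tp + fp + fn
    return 2 * tp / denom if denom else 0.0


def jaccard(tp: int, tn: int, fp: int, fn: int) -> float:
    """Intersection over union of predicted and true positives."""
    denom = tp + fp + fn
    return tp / denom if denom else 0.0


def mcc(tp: int, tn: int, fp: int, fn: int) -> float:
    """Matthews correlation coefficient, in the range [-1, 1]."""
    denom = math.sqrt((tp + fp) * (tp + fn) * (tn + fp) * (tn + fn))
    return (tp * tn - fp * fn) / denom if denom else 0.0


def flatten(mask: List[List[int]]) -> List[int]:
    """Turn a 2-D segmentation mask into a flat list of pixel labels."""
    return [v for row in mask for v in row]


if __name__ == "__main__":
    # Toy labels, not data from the study.
    counts = confusion([1, 1, 0, 0, 1, 0], [1, 0, 0, 1, 1, 0])
    print(counts)             # (2, 2, 1, 1)
    print(accuracy(*counts))  # 0.666...
    print(f1_dice(*counts))   # 0.666...
    print(jaccard(*counts))   # 0.5
    print(mcc(*counts))       # 0.333...
```

For a single mask, Dice D and Jaccard J satisfy J = D / (2 − D), so the two segmentation scores move together. MCC also uses TN, which makes it more informative than accuracy when one class dominates, as background pixels do in a mammogram.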

Image: Comparison of two example mammograms from DDSM and INbreast (Photo courtesy of ResearchGate).

The researchers concluded that the CAD system outperformed the latest conventional deep learning methodologies and will assist radiologists in all stages of detection, segmentation, and classification.

Related Links:

Kyung Hee University