AI Fuses CT and MRI Scans for Improved Clinical Diagnosis

Posted on 30 Jun 2023

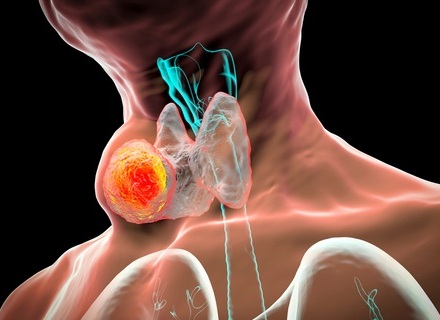

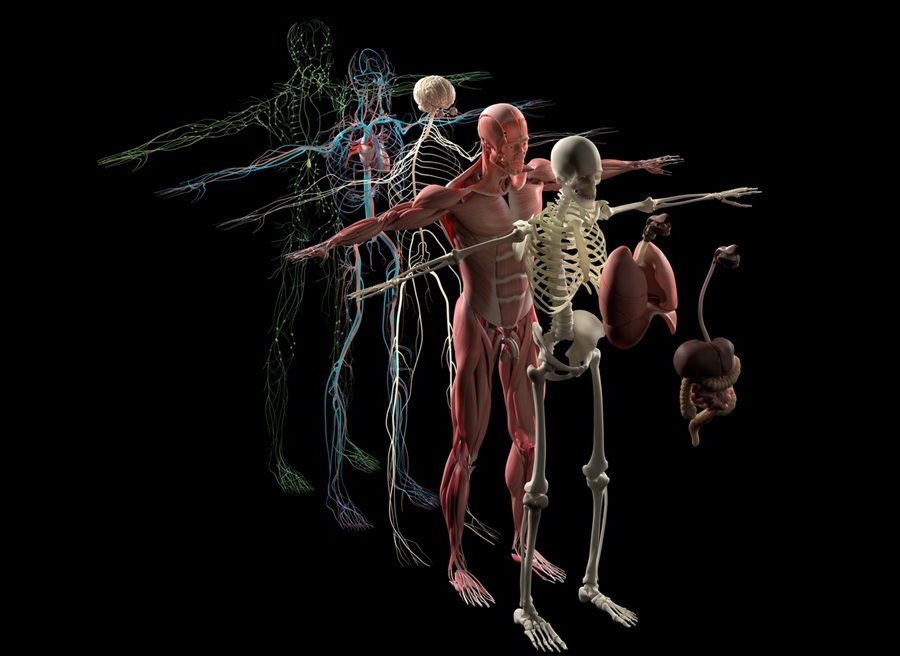

Computed tomography (CT) uses X-rays to capture detailed cross-sectional images of the body, which can be reconstructed into 3D visualizations. CT is particularly good at showing bone, because bone is largely opaque to X-rays. Magnetic resonance imaging (MRI), on the other hand, uses powerful magnetic fields and radio waves to produce detailed images of soft tissues such as organs and damaged tissue. Combining the two techniques could give healthcare professionals a more complete view of a patient's anatomy and reveal aspects of a condition that neither scan shows clearly on its own. Now, new research has demonstrated how artificial intelligence (AI) can be used to fuse images from clinical X-ray CT and MRI scans.

The new method, known as the Dual-Branch Generative Adversarial Network (DBGAN), was developed by researchers at Queen Mary University of London (London, UK) and Shandong University of Technology (Zibo, China). It could enable clearer, more clinically valuable interpretation of CT and MRI scans. The technique merges the rigid bone structures captured by CT with the detailed soft-tissue information captured by MRI. This could improve diagnosis and patient care for the many conditions in which these scans are routinely used but have limitations when used separately.

DBGAN is a deep-learning approach with a dual-branch structure containing multiple generators and discriminators. The generators produce fused images that combine the key features and complementary information from the CT and MRI scans. The discriminators assess the generated images by comparing them against real images, and their feedback drives the generators toward progressively higher-quality fusions. This adversarial interaction between generators and discriminators allows CT and MRI images to be fused efficiently and realistically, minimizing artifacts and preserving as much visual information as possible.
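To make the adversarial interaction more concrete, the sketch below computes standard GAN losses for a setup with two discriminators, one judging the fused image against the CT scan and one against the MRI scan. This dual-discriminator arrangement, the equal loss weights, and the helper names (`bce`, `discriminator_loss`, `generator_loss`) are illustrative assumptions rather than DBGAN's published loss functions, and the discriminator scores are hypothetical numbers standing in for network outputs.

```python
import math

EPS = 1e-12  # keeps log() away from zero


def bce(prob: float, target: float) -> float:
    """Binary cross-entropy for a single predicted probability."""
    prob = min(max(prob, EPS), 1.0 - EPS)
    return -(target * math.log(prob) + (1.0 - target) * math.log(1.0 - prob))


def discriminator_loss(d_real: float, d_fake: float) -> float:
    """A discriminator is rewarded for scoring real images near 1 and fused images near 0."""
    return bce(d_real, 1.0) + bce(d_fake, 0.0)


def generator_loss(d_ct_fake: float, d_mri_fake: float,
                   w_ct: float = 0.5, w_mri: float = 0.5) -> float:
    """Non-saturating generator loss: the generator is rewarded when BOTH
    discriminators (CT branch and MRI branch) score the fused image as real.
    The 0.5/0.5 weights are an assumption for illustration."""
    return w_ct * bce(d_ct_fake, 1.0) + w_mri * bce(d_mri_fake, 1.0)


# Hypothetical discriminator outputs for one training step.
d_ct_real, d_ct_fake = 0.9, 0.3    # CT-branch scores: real CT vs. fused image
d_mri_real, d_mri_fake = 0.85, 0.4 # MRI-branch scores: real MRI vs. fused image

loss_D = discriminator_loss(d_ct_real, d_ct_fake) + discriminator_loss(d_mri_real, d_mri_fake)
loss_G = generator_loss(d_ct_fake, d_mri_fake)
```

In a real training loop these losses would be backpropagated through the networks in alternation. The discriminators do not literally discard images; they supply a training signal that rewards the generator for producing a fused image both branches accept as realistic.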

DBGAN also incorporates a multi-scale extraction module (MEM), which extracts key features and fine detail from the CT and MRI scans, and a self-attention module (SAM), which highlights the most relevant and distinctive features in the fused images. In comprehensive testing, DBGAN outperformed existing techniques in terms of image quality and diagnostic accuracy. Because CT and MRI each have their own strengths and weaknesses, AI can help radiographers combine both types of scans so that the strengths of each compensate for the weaknesses of the other.
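The two modules can be illustrated in miniature. The plain-Python sketch below stands in for the MEM with simple average pooling at several scales, and for the SAM with scaled dot-product self-attention using identity query/key/value projections. Both are simplifying assumptions: the actual DBGAN modules use learned network layers whose details are not described here, and the function names and toy images are hypothetical.

```python
import math


def avg_pool(img, k):
    """Downsample a 2D list by averaging non-overlapping k x k blocks."""
    h, w = len(img) // k, len(img[0]) // k
    return [[sum(img[i * k + di][j * k + dj] for di in range(k) for dj in range(k)) / (k * k)
             for j in range(w)] for i in range(h)]


def multi_scale_features(img, scales=(1, 2, 4)):
    """Stand-in for multi-scale extraction: one pooled copy of the image per scale,
    from fine detail (scale 1) to coarse structure (largest scale)."""
    return [avg_pool(img, k) for k in scales]


def softmax(xs):
    m = max(xs)  # subtract the max for numerical stability
    exps = [math.exp(x - m) for x in xs]
    s = sum(exps)
    return [e / s for e in exps]


def self_attention(tokens):
    """Scaled dot-product self-attention with identity Q/K/V projections (an assumption;
    real modules learn these projections). Each output vector is a weighted mix of all
    tokens, with weights set by each token's similarity to the query token."""
    d = len(tokens[0])
    out = []
    for q in tokens:
        scores = [sum(a * b for a, b in zip(q, k)) / math.sqrt(d) for k in tokens]
        weights = softmax(scores)
        out.append([sum(w * v[c] for w, v in zip(weights, tokens)) for c in range(d)])
    return out


# Toy 4x4 "CT" and "MRI" images with hypothetical intensities.
ct = [[float(i * 4 + j) for j in range(4)] for i in range(4)]
mri = [[float(15 - (i * 4 + j)) for j in range(4)] for i in range(4)]
ct_scales = multi_scale_features(ct)
mri_scales = multi_scale_features(mri)

# One [CT, MRI] feature vector per location at the 2x2 scale, then attention over them.
tokens = [[c, m] for row_c, row_m in zip(ct_scales[1], mri_scales[1])
          for c, m in zip(row_c, row_m)]
attended = self_attention(tokens)
```

Pooling keeps the example dependency-free; in a trained network, learned convolutions at each scale would replace it, and the attention weights would emphasize whichever CT or MRI features the model finds most informative.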

Related Links:

Queen Mary University of London

Shandong University of Technology