AI-Reconstructed Medical Images May Be Unreliable

By MedImaging International staff writers

Posted on 16 Jun 2020

A new study suggests that deep learning tools used to create high-quality images from short scan times can introduce multiple alterations and artefacts into the data that could affect diagnosis.

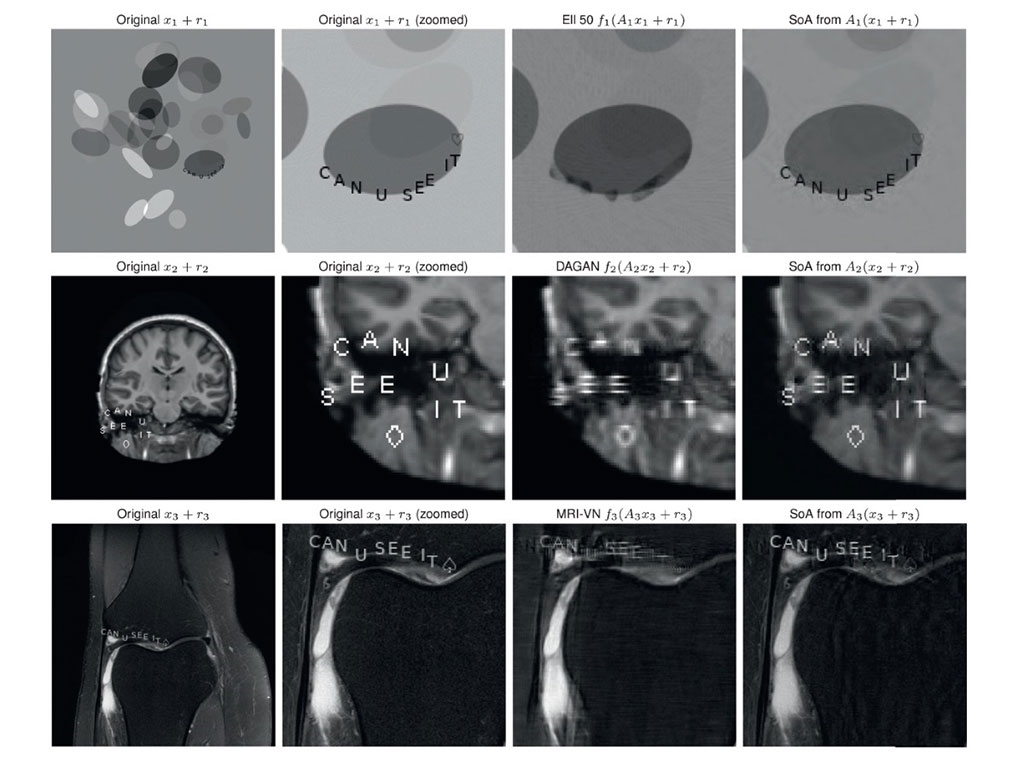

Researchers at the University of Oslo (Norway), the University of Cambridge (United Kingdom), and other institutions conducted a study to test six different artificial intelligence (AI) neural networks trained to create enhanced images from magnetic resonance imaging (MRI) or computerized tomography (CT) scans. The networks were fed data designed to replicate three possible issues: tiny perturbations, small structural changes, and changes in the sampling rate compared with the data on which the AI was trained. To test the ability of the systems to detect small structural changes, the team added letters and symbols from playing cards to the images.

Image: Images with small structural perturbations (text and symbols) reconstructed with AI (Photo courtesy of PNAS)

The results showed that only one of the networks was able to reconstruct these details, while the other five presented issues ranging from blurring to almost complete removal of the changes. Only one of the neural networks produced better images as the researchers increased the sampling rate of the scans. Another network stagnated, with no improvement in quality, and in three, the reconstructions dropped in quality as the number of samples increased. The sixth AI system did not allow the sampling rate to be changed. The study was published on May 11, 2020, in the Proceedings of the National Academy of Sciences (PNAS).

“You take a tiny little perturbation and the AI system says the image of the cat is suddenly a fire truck; researchers need to start testing the stability of these systems. What they will see on a large scale is that many of these AI systems are unstable,” said senior author Anders Hansen, PhD, of the University of Cambridge. “The big, big problem is that there is no mathematical understanding of how these AI systems work. They become a black box, and if you don’t test these things properly you can have completely disastrous outcomes.”

Instabilities during scanning can appear in three forms: as tiny, almost undetectable perturbations (for example, due to patient movement) that appear in both the image and sampling domains, resulting in artefacts in the reconstruction; as small structural changes, for example a tumor, that may not be captured in the reconstructed image; and as differing sampling rates that do not coincide with the data on which the AI algorithm was trained.
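For readers who want a feel for what such a stability check involves, the three probes can be sketched in a few lines of Python with NumPy. This is only an illustrative sketch, not the procedure used in the study: the function names (`sampling_mask`, `reconstruct`, `relative_error`) are hypothetical, the "scan" is a toy square, and the reconstruction is a plain zero-filled inverse Fourier transform standing in for a trained network. Because that stand-in is linear, it cannot amplify a perturbation, which makes it a reasonable baseline against which a learned reconstructor could be compared.

```python
import numpy as np

def sampling_mask(shape, rate, rng):
    """Random mask keeping roughly `rate` of the frequency samples."""
    mask = rng.random(shape) < rate
    mask[0, 0] = True  # always keep the zero-frequency (mean) component
    return mask

def reconstruct(image, mask):
    """Zero-filled inverse FFT; a linear stand-in for a trained network (assumption)."""
    kspace = np.fft.fft2(image) * mask
    return np.real(np.fft.ifft2(kspace))

def relative_error(reference, estimate):
    """Error of the estimate relative to the size of the reference."""
    return np.linalg.norm(estimate - reference) / np.linalg.norm(reference)

rng = np.random.default_rng(0)
image = np.zeros((64, 64))
image[16:48, 16:48] = 1.0  # toy "anatomy", not real scan data

mask = sampling_mask(image.shape, 0.25, rng)
baseline = reconstruct(image, mask)

# Probe 1: tiny perturbation. How much does the output move per unit of input change?
noise = 1e-3 * rng.standard_normal(image.shape)
perturbed = reconstruct(image + noise, mask)
amplification = np.linalg.norm(perturbed - baseline) / np.linalg.norm(noise)

# Probe 2: small structural change. How much of an added detail survives at its location?
detail = np.zeros_like(image)
detail[30:34, 30:34] = 0.5
with_detail = reconstruct(image + detail, mask)
recovered = (with_detail - baseline) * (detail > 0)
detail_retained = np.linalg.norm(recovered) / np.linalg.norm(detail)

# Probe 3: sampling rate. Does the error fall as more samples are used?
errors = {
    rate: relative_error(image, reconstruct(image, sampling_mask(image.shape, rate, rng)))
    for rate in (0.1, 0.25, 0.5, 1.0)
}

print(f"perturbation amplification: {amplification:.3f}")
print(f"detail retained: {detail_retained:.3f}")
for rate, err in errors.items():
    print(f"sampling rate {rate:.2f}: relative error {err:.3e}")
```

To probe a learned method in the same way, `reconstruct` would be swapped for a call to that model. An amplification well above 1, a retained-detail score near 0, or an error that rises with the sampling rate would correspond to the three kinds of instability described above.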

Related Links:

University of Oslo

University of Cambridge